The U.S. Olympic men’s and women’s sprinting teams have won more gold medals than those of any other country in history, but the men’s 4×100-meter relay team has suffered four blistering defeats in the past two decades. Why? An absolute whiff at the critical moment when a runner has to instinctively reach back and trust a teammate to place the baton perfectly in their hand.

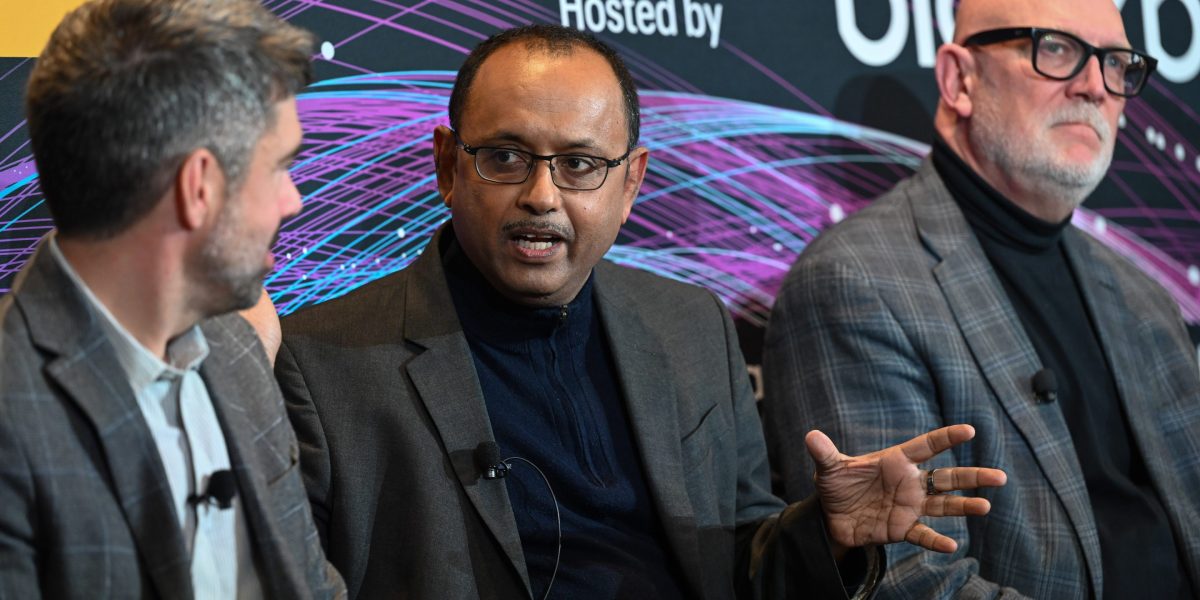

Sudip Datta, chief product officer at AI-powered software firm Blackbaud, said that image captures exactly what’s taking place in AI today. Companies are advancing swiftly to build the fastest and most powerful systems they can, but there’s a severe lack of trust between the technology and the people using it, causing any new innovation or efficiencies to completely fumble at the handoff.

“How many times did the U.S. have the fastest athletes, but ended up losing the 4×100 relay?” Datta asked an expert roundtable audience at Fortune’s Brainstorm AI event in San Francisco this week. “Because the trust was not there, where the runner would blindly take it from someone who is passing the baton.”

Datta said that reflexive reach backward on faith alone is what will separate the winners from the losers in AI adoption. A major obstacle is that many companies today treat trust-building as a compliance burden that slows everything down. The opposite is true, he told the Brainstorm AI audience.

“Trust is actually a revenue driver,” said Datta. “It’s an enabler because it propels further innovation, because the more customers trust us, we can accelerate on that innovation journey.”

Scott Howe, president and CEO of data collaboration network LiveRamp, outlined five conditions that need to be met in order to build trust: transparency into how your data is going to be used; control over your data; an exchange of value for personal data; data portability; and finally, interoperability. Regulation has done a reasonable job of setting up the first two, but “we still have a long way to go” on the remaining three, he said. Regulations including the EU’s General Data Protection Regulation (GDPR) have secured some minimal progress, but Howe said most people don’t “get nearly fair value for the data we contribute.”

“Instead, really big companies, some of whom are speaking on stage today, have scraped the value and made a ton of money,” said Howe. “And then the last two, as an industry and as businesses, we are nowhere on.”

Owning the data

In Howe’s vision of the future, he sees data being viewed as a property right and people being entitled to fair compensation for its use.

“The LLMs don’t own my data,” said Howe, referring to large language models. “I should own my data and so I should be able to take it from Amazon to Google, and from Google to Walmart if I want, and it should travel with me.”

However, major tech companies are actively resisting portability and interoperability, which has created data silos that entomb customers in their current ecosystems, said Howe.

Beyond personal data and potential consumer rights issues, the trust challenge takes a different shape inside companies, each of which has to decide what its own AI systems can safely access and which tasks can be completed autonomously.

Spencer Beemiller, innovation officer at software company ServiceNow, said the firm’s customers are trying to determine which AI systems can operate without human oversight, a question that remains largely unanswered. He said ServiceNow helps organizations track their AI agents the same way they’ve historically monitored infrastructure: by tracking what the systems are doing, what they have access to, and where they are in their lifecycle.

“We’re trying to get a little bit of a grasp on helping our customers determine what points actually matter to create that autonomous decision making,” Beemiller said.

Issues like hallucinations, where an AI system will confidently provide made-up or inaccurate information in response to a question, require significant risk mitigation processes, he said. ServiceNow approaches it by using what Beemiller called “orchestration layers,” in which queries are directed to specialized models. Small language models handle enterprise-specific tasks that require more precision, while larger models manage natural conversational items, he said.

“So it’s a little bit of a ‘Yes, and’ conversation of certain agent components will talk to specific models that are only trained on internal data,” he said. “Others called up from the orchestration layer will abstract to a larger model to be able to answer the problem.”
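
To make the routing idea concrete, here is a minimal sketch of what an orchestration layer of this kind might look like. It is not ServiceNow's implementation: the model names, the keyword list, and the keyword-matching rule are all hypothetical, and the two "models" are stand-in functions rather than real language models.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Model:
    """A named model plus the function that answers a query (both hypothetical)."""
    name: str
    handler: Callable[[str], str]


# Stand-ins for real models; a production system would call actual model endpoints.
SMALL = Model("internal-slm", lambda q: f"[internal-slm] {q}")
LARGE = Model("general-llm", lambda q: f"[general-llm] {q}")

# Assumed list of terms that mark a query as enterprise-specific.
ENTERPRISE_KEYWORDS = {"ticket", "invoice", "payroll", "policy"}


def route(query: str) -> Model:
    """Send enterprise-specific queries to the small model, everything else to the large one."""
    words = {w.strip(".,?!").lower() for w in query.split()}
    return SMALL if words & ENTERPRISE_KEYWORDS else LARGE


def answer(query: str) -> str:
    """Route the query, then let the chosen model answer it."""
    return route(query).handler(query)


if __name__ == "__main__":
    print(answer("What is the status of my payroll ticket?"))
    print(answer("Tell me a joke"))
```

A real router would likely use a classifier or the agent's own metadata rather than keyword matching; the point of the sketch is only the split between a narrow model for precise internal tasks and a broader model for conversational ones.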

Still, many fundamental issues remain unresolved, including questions about cybersecurity, critical infrastructure, and the potentially catastrophic consequences that could stem from AI errors. And even more so than in other areas of tech, there’s an inherent tension between moving fast and getting it right.

“If we can win the trust, speed follows,” Datta said. “It’s not about only running fast, but also having trust along the way.”
