Business

Inside the new open-source AI that helps anyone track a changing planet

Published

3 months ago

By

Jace Porter

Welcome to Eye on AI, with AI reporter Sharon Goldman in for Jeremy Kahn, who is traveling. In this edition…a new open-source AI platform helps nonprofits and public agencies track a changing planet…Getty Images narrowly wins, but mostly loses in landmark UK lawsuit against Stability AI’s image generator…Anthropic is projecting $70 billion in revenue…China offers tech giants cheap power to boost domestic AI chips...Amazon employees push back on company’s AI expansion.

I’m excited to share an “AI for good” story in today’s Eye on AI: Imagine if conservation groups, scientists, and local governments could easily use AI to take on challenges like deforestation, crop failure, or wildfire risk, with no AI expertise at all.

Until now, that’s been out of reach—requiring enormous, inaccessible datasets, major budgets, and specialized AI know-how that most nonprofits and public agencies lack. Platforms like Google Earth AI, released earlier this year, and other proprietary systems have shown what’s possible when you combine satellite data with AI, but those are closed systems that require access to cloud infrastructure and developer know-how.

That’s now changing with OlmoEarth, a new open-source, no-code platform that runs powerful AI models trained on millions of Earth observations—from satellites, radar, and environmental sensors, including open data from NASA, NOAA, and the European Space Agency—to analyze and predict planetary changes in real time. It was developed by Ai2, the Allen Institute for AI, a Seattle-based nonprofit research lab founded in 2014 by the late Microsoft co-founder Paul Allen.

Early partners are already putting OlmoEarth to work: In Kenya, researchers are mapping crops to help farmers and officials strengthen food security. In the Amazon, conservationists are spotting deforestation in near real time. And in mangrove regions, early tests show 97% accuracy—cutting processing time in half and helping governments act faster to protect fragile coastlines.

I spoke with Patrick Beukema, who heads the Ai2 team that built OlmoEarth, a project that kicked off earlier this year. Beukema said the goal was to go beyond just releasing a powerful model. Many organizations struggle to connect raw satellite and sensor data into usable AI systems, so Ai2 built OlmoEarth as a full, end-to-end platform.

“Organizations find it extremely challenging to build the pipelines from all these satellites and sensors, just even basic things are very difficult to do–a model might need to connect to 40 different channels from three different satellites,” he explained. “We’re just trying to democratize access for these organizations who work on these really important problems and super important missions–we think that technology should basically be publicly available and easy to use.”

One concrete example Beukema gave me was around assessing wildfire risk. A key variable in wildfire risk assessment is how wet the forest is, since that determines how flammable it is. “Currently, what people do is go out into the forest and collect sticks or logs and weigh them pre-and-post dehydrating them, to get one single measurement of how wet it is at the location,” he said. “Park rangers do this work, but it’s extremely expensive and arduous to do.”

With OlmoEarth, AI can now estimate that forest moisture from space: The team trained the model using years of expert field data from forest and wildfire managers, pairing those ground measurements with satellite observations from dozens of channels—including radar, infrared, and optical imagery. Over time, the model learned to predict how wet or dry an area is just by analyzing that mix of signals.

Once trained, it can continuously map moisture levels across entire regions, updating as new satellite data arrives—and do it millions of times more cheaply than traditional methods. The result: near real-time wildfire-risk maps that can help planners and rangers act faster.

“Hopefully this helps the folks on the front lines doing this important work,” said Beukema. “That’s our goal.”

With that, here’s more AI news.

Sharon Goldman

sharon.goldman@fortune.com

@sharongoldman

If you want to learn more about how AI can help your company to succeed and hear from industry leaders on where this technology is heading, I hope you’ll consider joining Jeremy and me at Fortune Brainstorm AI San Francisco on Dec. 8–9. Among the speakers confirmed to appear so far are Google Cloud chief Thomas Kurian, Intuit CEO Sasan Goodarzi, Databricks CEO Ali Ghodsi, Glean CEO Arvind Jain, Amazon’s Panos Panay, and many more. Register now.

FORTUNE ON AI

Palantir quarterly revenue hits $1.2B, but shares slip after massive rally– by Jessica Mathews

Amazon says its AI shopping assistant Rufus is so effective it’s on pace to pull in an extra $10 billion in sales – by Dave Smith

Sam Altman sometimes wishes OpenAI were public so haters could short the stock: ‘I would love to see them get burned on that’ – by Marco Quiroz-Gutierrez

AI empowers criminals to launch ‘customized attacks at scale’—but could also help firms fortify their defenses, say tech industry leaders – by Angelica Ang

AI IN THE NEWS

Getty Images mostly loses landmark UK lawsuit against Stability AI image generator. Reuters reported today that a London court ruled that Getty only narrowly succeeded, but mostly lost, in its case against Stability AI, finding that Stable Diffusion infringed Getty’s trademarks by reproducing its watermark in AI-generated images. But the judge dismissed Getty’s broader copyright claims, saying Stable Diffusion “does not store or reproduce any copyright works”—a technical distinction that lawyers said exposes gaps in the U.K.’s copyright protections. The mixed verdict leaves unresolved the central question of whether training AI models on copyrighted data constitutes infringement, an outcome that both companies claimed as a partial victory. Getty said it plans to use the ruling to bolster its parallel lawsuit in the U.S., while calling on governments to strengthen transparency and intellectual property rules for AI.

Anthropic projects $70 billion in revenue, $17 billion in cash flow in 2028. Anthropic, maker of the Claude chatbot, is projecting explosive growth—forecasting as much as $70 billion in revenue by 2028, up from about $5 billion this year, according to The Information. The company expects most of that growth to come from businesses using its AI models through an API—revenue it predicts will roughly double OpenAI’s comparable sales next year. Unlike ChatGPT-maker OpenAI, which is burning billions on computing costs, Anthropic expects to be cash-flow positive by 2027 and generate up to $17 billion in cash the following year. Those numbers could help it target a valuation between $300 billion and $400 billion in its next funding round—positioning the four-year-old startup as a financially efficient challenger to OpenAI’s dominance.

China offers tech giants cheap power to boost domestic AI chips. According to the Financial Times, China is ramping up subsidies for its biggest data centers, cutting electricity bills by as much as 50% for facilities powered by domestic AI chips, in a bid to reduce reliance on Nvidia and strengthen its homegrown semiconductor industry. Local governments in provinces like Gansu, Guizhou, and Inner Mongolia are offering new incentives after tech giants including ByteDance, Alibaba, and Tencent complained that Chinese chips from Huawei and Cambricon were less energy-efficient and costlier to run. The move underscores Beijing’s push to make its AI infrastructure self-sufficient, even as the country’s data center power demand surges and domestic chips still require 30–50% more electricity than Nvidia’s.

Amazon employees push back on company’s AI expansion. Last week, a group of Amazon employees published an open letter warning that the company’s “warp-speed” push into artificial intelligence is coming at the expense of climate goals, worker protections, and democratic accountability. The signatories—who say they help build and deploy Amazon’s AI systems—argue that the company’s planned $150 billion data center expansion will increase carbon emissions and water use, particularly in drought-prone regions, even as it continues supplying cloud tools to oil and gas companies. They also criticize Amazon’s growing ties to government surveillance and military contracts, and claim that internal AI initiatives are accelerating automation without supporting worker advancement. The group is calling for three commitments: no AI powered by dirty energy, no AI built without employee input, and no AI for violence or mass surveillance.

AI CALENDAR

Nov. 10-13: Web Summit, Lisbon.

Nov. 26-27: World AI Congress, London.

Dec. 2-7: NeurIPS, San Diego

Dec. 8-9: Fortune Brainstorm AI San Francisco. Apply to attend here.

EYE ON AI RESEARCH

What if large AI models could read each other’s minds instead of chatting in text? That’s the idea behind a new paper from researchers at CMU, Meta AI, and MBZUAI called Thought Communication in Multiagent Collaboration. The team proposes a system called ThoughtComm, which lets AI agents share their latent “thoughts”—the hidden representations behind their reasoning—rather than just exchanging words or tokens. To do that, they use a sparsity-regularized autoencoder, a kind of neural network that compresses complex information into a smaller set of the most important features, helping reveal which “thoughts” truly matter. By learning which ideas agents share and which they keep private, this framework allows them to coordinate and reason together more efficiently—hinting at a future where AIs collaborate not by talking, but by “thinking” in sync.

BRAIN FOOD

How AI companies may be quietly training on paywalled journalism

I wanted to highlight a new Atlantic investigation by staff writer Alex Reisner, which exposes how Common Crawl, a nonprofit that scrapes billions of web pages to build a free internet archive, may have become a back door for AI training on paywalled content. Reisner reports that despite Common Crawl’s public claim that it avoids content behind paywalls, its datasets include full articles from major news outlets—and those articles have ended up in the training data for thousands of AI models.

Common Crawl maintains that it is doing nothing wrong. When pressed on publishers’ requests to remove their content, Common Crawl’s director, Rich Skrenta, brushed off the complaints, saying: “You shouldn’t have put your content on the internet if you didn’t want it to be on the internet.” Skrenta, who told Reisner he views the archive as a kind of digital time capsule—“a crystal cube on the moon”—sees it as a record of civilization’s knowledge. But no matter what, it certainly highlights the ever-growing tension between AI’s hunger for data and the journalism industry’s fight over copyright.

Business

Microsoft CEO Satya Nadella’s biggest AI bubble warning yet is a challenge to the Fortune 500

Published

23 minutes ago on January 20, 2026

By

Jace Porter

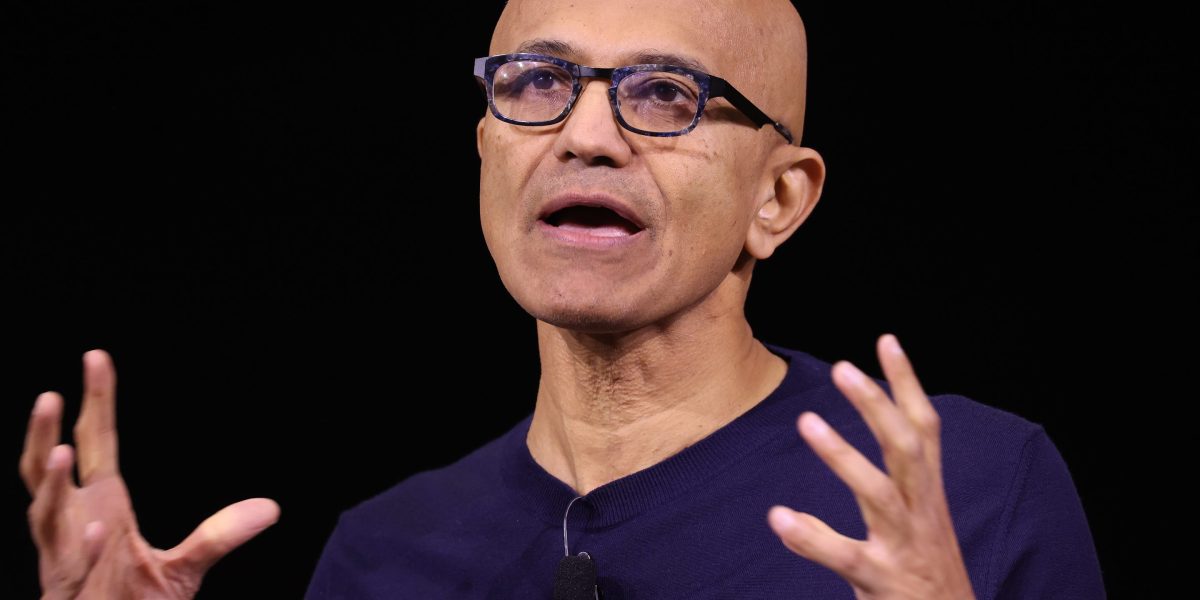

Microsoft CEO Satya Nadella has been leading the charge on artificial intelligence (AI) for years, owing to his long alliance with OpenAI’s Sam Altman and the groundbreaking work from his own AI CEO, Mustafa Suleyman, particularly with the Copilot tool. But Nadella has not spoken often about the fears that rattled Wall Street for much of the back half of 2025: whether AI is a bubble.

At the World Economic Forum annual meeting in Davos, Switzerland, Nadella sat for a conversation with the Forum’s interim co-chair, BlackRock CEO Larry Fink, explaining that if AI growth stems solely from investment, that could be a sign of a bubble. “A telltale sign of if it’s a bubble would be if all we are talking about are the tech firms,” Nadella said. “If all we talk about is what’s happening to the technology side then it’s just purely supply side.”

However, Nadella offers a fix to that productivity dilemma, calling on business leaders to adopt a new approach to knowledge work by shifting workflows to match the structural design of AI. “The mindset we as leaders should have is, we need to think about changing the work—the workflow—with the technology.”

Growing pains

This change is not wholly unprecedented, as Nadella pointed out, comparing the current moment to that of the 1980s, when computing revolutionized the workplace and opened up new opportunities for growth and productivity and created a new class of workers. “We invented this entire class of thing called knowledge work, where people started really using computers to amplify what we were trying to achieve using software,” he said. “I think in the context of AI, that same thing is going to happen.”

Nadella argues that AI creates a “complete inversion” of how information moves through a business, replacing slow, hierarchical processes with a view that forces leaders to rethink their organizational structures. “We have an organization, we have departments, we have these specializations, and the information trickles up,” Nadella said. “No, no, it’s actually it flattens the entire information flow. So once you start having that, you have to redesign structurally.”

That shift may be harder for some Fortune 500 companies as structural changes could be accompanied by uncomfortable growing pains. Nadella says that leaner companies will be able to more easily adopt AI because their organizational structures are fresher and more malleable. On the other hand, large companies could take time to adopt new workflows.

Despite widespread adoption of AI, the 29th edition of PwC’s global CEO survey found that only 10% to 12% of companies reported seeing benefits of the technology on the revenue or cost side, while 56% reported getting nothing out of it. It follows up on an even more pessimistic finding about AI returns from August 2025: that 95% of generative AI pilots were failing.

PwC Global Chairman Mohamed Kande spoke to Fortune’s Diane Brady in Davos about the finding that many CEOs are cautious and lack confidence at this stage of the AI adoption cycle. “Somehow AI moves so fast … that people forgot that the adoption of technology, you have to go to the basics,” he explained, with the survey finding that the companies seeing benefits from AI are “putting the foundations in place.” It’s about execution more than it is about technology, he argued, and good management and leadership are really going to matter going forward.

“For large organizations,” Nadella told Fink, “there’s a fundamental challenge: Unless and until your rate of change keeps up with what is possible, you’re going to get schooled by someone small being able to achieve scale because of these tools.”

New entrants have the advantage of “starting fresh” and constructing workflows around AI capabilities, while larger firms will have to contend with the flattening effect AI has on entire departments and specializations.

To be sure, Nadella says that large organizations have kept an upper hand, especially when it comes to relationships, data, and know-how. However, he maintains that if firms fail to understand how to use those resources to their advantage and change their management style, that could pose a major roadblock.

“The bottom line is, if you don’t translate that with a new production function, then you really will be stuck,” he said.

Business

BlackRock’s billionaire CEO warns AI could be capitalism’s next big failure after 30 years of unsustainable inequality after the Cold War

Published

54 minutes ago on January 20, 2026

By

Jace Porter

BlackRock CEO Larry Fink opened the World Economic Forum in Davos, Switzerland, with a stark message to the global elite: AI’s unfettered growth risks pummelling the world’s working and professional classes. Beyond that, he warned that it could be capitalism’s next big failure, following a 30-year post-Cold War reign that has failed to deliver for the average person.

In his opening remarks on Tuesday at the gathering of thousands of executives and global leaders, the billionaire boss of the world’s largest asset manager—often called one of Wall Street’s “Masters of the Universe”—said that as those in power discuss the future of AI, they risk leaving behind the vast majority of the world, just as they have for much of the last generation.

“Since the fall of the Berlin Wall, more wealth has been created than in any time prior in human history, but in advanced economies, that wealth has accrued to a far narrower share of people than any healthy society can ultimately sustain,” Fink said.

Fink, who has used his annual BlackRock letters and annual appearances at Davos to set the agenda for a more progressive kind of capitalism, even one that is arguably “woke,” making him at times the face of ESG and of stakeholder capitalism, warned that the gains of the tremendous wealth creation since the 1990s have not been equitably shared. And the capitalist ideology driving AI development and implementation forward could come at the expense of the wage-earning majority, he added.

“Early gains are flowing to the owners of models, owners of data and owners of infrastructure,” Fink said. “The open question: What happens to everyone else if AI does to white-collar workers what globalization did to blue-collar workers? We need to confront that today directly. It is not about the future. The future is now.”

Fink’s past critiques of capitalism

Fink, who was appointed interim co-chair of the World Economic Forum in August 2025, replacing founder Klaus Schwab, has long espoused the reshaping of capitalism, seeing it as a responsibility of large asset managers like himself. Fink was formerly vociferous about the importance of environmental, social, and corporate governance (ESG) investing, and has argued that climate change is reshaping finance, creating an imperative for executives to reallocate their capital to address the crisis accordingly. In a 2022 letter to investors, published the day before the Davos summit, Fink emphasized a model of “stakeholder capitalism,” in which a business’s mandate is to serve not just shareholders, but employees, consumers, and the public.

This year’s Davos, with Fink newly at the helm, is the first without Schwab, following allegations that Schwab had expensed more than $1 million, billed to the World Economic Forum, on questionable travel spending, as well as claims of workplace misconduct and research report manipulation. The BlackRock chief emphasized the need for the gathering to demonstrate its legitimacy in part by showing that it’s concerned not just with the swelling growth of companies and countries, but also with the economic welfare of their employees and citizens.

“Many of the people most affected by what we talk about here will never come to this conference,” Fink said. “That’s a central tension of this forum. Davos is an elite gathering trying to shape a world that belongs to everyone.”

Though BlackRock announced in early 2025 it would roll back many of the diversity, equity, and inclusion goals it created a few years before, Fink has once again used his spotlight to call on leaders to transform their capitalist sensibilities, this time in how they imagine the AI future.

The cost of the AI boom

Last year capped an explosion of growth in the AI sector, with Morningstar analysts finding a group of 34 AI stocks, including Amazon, Alphabet, and Microsoft, shot up 50.8% in 2025. AI firms and investors have seen their wealth skyrocket in the past year. Per the Bloomberg Billionaires Index, the median increase in net worth last year was nearly $10 billion among the 50 wealthiest Americans. Google co-founders Larry Page and Sergey Brin, for example, got $101 billion and $92 billion richer, respectively, in 2025.

The BlackRock CEO noted these gains, however, have been reserved for the richest few, alluding to a K-shaped economy of the rich getting richer, while the poor continue to struggle: The bottom half of Americans, in short, are not cashing in on the AI race. Although Fink didn’t get into the politics of utilities setting electricity prices, it seems the poor are actually paying higher bills to support the data centers powering the AI boom. According to Federal Reserve data, that bottom half owns about 1% of stock market wealth, which translates to about 165 million people owning $628 billion in stock. Conversely, the top 1% of wealthiest households own nearly 50% of corporate equity.

Fink’s framing of the post-Cold War era as one of exploding inequality reflects a once niche view that has become increasingly mainstream in the 21st century. While the triumph of the West over communism was seen as the ultimate victory for capitalism, as epitomized by Francis Fukuyama’s The End of History and the Last Man, history has in fact continued. The unprecedented rise of China as an economic superpower, through its fusion of socialism and capitalism “with Chinese characteristics,” has complicated the narrative, as has the inequality alluded to by Fink.

An internal critic of the post-Cold War world order is Andrew Bacevich, a military veteran and historian who described the collapse of the Soviet Union in 1989 as “akin to removing the speed limiter from an internal combustion engine.” Bacevich’s 2020 book The Age of Illusions: How America Squandered Its Cold War Victory was an early articulation of the once niche viewpoint that Fink lent support to on Tuesday.

What AI’s growth means for workers

Similarly, the risks of the AI boom for workers extend beyond who has a stake in the technology industry’s growth. Nobel laureate and “godfather of AI” Geoffrey Hinton has previously warned this explosion of wealth for the few will come at the expense of white-collar workers, who will be displaced by the technology.

“What’s actually going to happen is rich people are going to use AI to replace workers,” Hinton said in September. “It’s going to create massive unemployment and a huge rise in profits. It will make a few people much richer and most people poorer. That’s not AI’s fault, that is the capitalist system.”

Some companies have already leaned into culling headcount to grow profits, including enterprise-software firm IgniteTech. CEO Eric Vaughan laid off nearly 80% of his staff in early 2023, according to figures reviewed by Fortune. Vaughan said the reductions happened during an inflection point in the tech industry, where failure to efficiently adopt AI could be fatal for a company. He’s since rehired for all of those roles, and he would make the same choice again today, he told Fortune.

According to Fink, sustaining a white-collar workforce will depend on the world’s most powerful people creating an actionable plan that will defy the critiques of a capitalist system that has, so far, predominantly benefited them.

“Not with abstractions about the jobs of tomorrow, but with a credible plan for broad participation in these gains, this is going to be the test,” Fink said. “Capitalism can evolve to turn more people into owners of growth, instead of spectators watching it happen.”

This story was originally featured on Fortune.com

Business

At Davos, AI hype gives way to focus on ROI

Published

1 hour ago on January 20, 2026

By

Jace Porter

Hello and welcome to Eye on AI. In this edition….a dispatch from Davos…OpenAI ‘on track’ for device launch in 2026…Anthropic CEO on China chip sales…and is Claude Code Anthropic’s ChatGPT moment?

Hi. I’m in Davos, Switzerland, this week for the World Economic Forum. Tomorrow’s visit of U.S. President Donald Trump is dominating conversations here. But when people aren’t talking about Trump and his imposition of tariffs on European allies that oppose his attempt to wrest control of Greenland from Denmark, they are talking a lot about AI.

The promenade in this ski town turns into a tech trade show floor at WEF time, with the logos of prominent software companies and consulting firms plastered to shopfronts and signage touting various AI products. But while last year’s Davos was dominated by hype around AI agents and overwrought hand-wringing that the debut of DeepSeek’s R1 model, which happened during 2025’s WEF, could mean the capital-intensive plans of the U.S. AI companies were for naught, this year’s AI discussions seem more sober and grounded.

The business leaders I’ve spoken to here at Davos are more focused than ever on how to drive business returns from their AI spending. The age of pilots and experimentation seems to be ending. So too is the era of imagining what AI can do. Many CEOs now realize that implementing AI at scale is not easy or cheap. Now there is much more attention on practical advice for using AI to drive enterprise-wide impact. (But there’s still a tinge of idealism here too as you’ll see.) Here’s a taste of some of the things I’ve heard in conversations so far:

CEOs take control of AI deployment

There’s a consensus that the bottom-up approaches—giving every employee access to ChatGPT or Microsoft Copilot, say—popular in many companies two years ago, in the initial days of the generative AI boom, are a thing of the past. Back then, CEOs assumed front line workers, closest to the business processes, would know how best to deploy AI to make them more efficient. This turned out to be wrong—or, perhaps more accurately, the gains from doing this tended to be hard to quantify and rarely added up to big changes in either the top or bottom line.

Instead, top-down, CEO-led initiatives aimed at transforming core business processes are now seen as essential for deriving ROI from AI. Jim Hagemann Snabe, the chairman of Siemens and former co-CEO at SAP, told a group of fellow executives at a breakfast discussion I moderated here in Davos today that CEOs need to be “dictators” in identifying where their businesses would deploy AI and pushing those initiatives forward. Similarly, both Christina Kosmowski, the CEO of IT and business data analytics company LogicMonitor, and Bastian Nominacher, the cofounder and co-CEO of process mining software company Celonis, told me that board and CEO sponsorship was an essential component to enterprise AI success.

Nominacher had a few other interesting lessons, including how, in research Celonis commissioned, establishing a center of excellence for figuring out how to optimize work processes with AI resulted in an 8x better return than for companies that failed to set up such a center. He also said that having data in the right place was essential to running process optimization successfully.

The race to become the orchestration layer for enterprise AI agents

There is clearly a race on among SaaS companies to become the new interface layer for AI agents that work in companies. Carl Eschenbach, Workday’s CEO, told me that he thinks his company is well-positioned to become “the front door to work” not only because it sits on key human resources and financial data, but because the company already handled onboarding, data access and permissioning, and performance management for human workers. Now it can do the same for AI agents.

But others are eyeing this prize too. Srini Tallapragada, Salesforce’s chief engineering and customer success officer, told me how his company is using “forward deployed engineers” at 120 of Salesforce’s largest customers to close the gap between customer pain points and product development, learning the best way to create agents for specific industry verticals and functions that it can then offer to Salesforce’s wider customer base. Judson Althoff, Microsoft’s commercial CEO, said that his company’s Data Fabric and Agent 365 products were gaining traction among big companies that need an orchestration layer for AI agents and a unified way to access data stored in different systems and silos without having to migrate that data to a single platform. Snowflake CEO Sridhar Ramaswamy, meanwhile, thinks the deep expertise his company has in maintaining cloud-based data pools and controlling access to that data, combined with newfound expertise in creating its own AI coding agents, makes his company ideally suited to win the race to be the AI agent orchestrator. Ramaswamy told me his biggest fear is whether Snowflake can keep moving fast enough to realize this vision before OpenAI or Anthropic move down the stack, from AI agents into data storage, potentially displacing Snowflake.

A couple more insights from Davos so far: while there is still a lot of fear about AI leading to widespread job displacement, it hasn’t shown up yet in economic data. In fact, Svenja Gudell, the chief economist at recruiting site Indeed, told me that while the tech sector has seen a huge decline in jobs since 2022, that trend predates the generative AI boom and is likely due to companies “right sizing” after the massive pandemic-era hiring boom rather than AI. And while many industries are not hiring much at the moment, Gudell says global macroeconomic and geopolitical uncertainty are to blame, not AI.

Finally, in a comment relevant to one of this week’s bigger AI news stories (that OpenAI is introducing ads to ChatGPT), Snabe, the Siemens chairman, had an interesting answer to a question about how AI should be regulated. He said that rather than trying to regulate AI use cases, as the EU AI Act has done, governments should mandate more broadly that AI adhere to human values. And the one piece of regulation that would do more than anything to ensure this, he said, would be to ban AI business models based on advertising. Ad-based AI models will lead companies to optimize for user engagement with all of the negative consequences for mental health and democratic consensus that we’ve seen from social media, only far worse.

With that, here’s more AI news.

Jeremy Kahn

jeremy.kahn@fortune.com

@jeremyakahn

Beatrice Nolan wrote the news and sub-sections of Eye on AI.

FORTUNE ON AI

Wave of defections from former OpenAI CTO Mira Murati’s $12 billion startup Thinking Machines shows cutthroat struggle for AI talent–by Jeremy Kahn and Sharon Goldman

ChatGPT tests ads as a new era of AI begins—by Sharon Goldman

A filmmaker deepfaked Sam Altman for his movie about AI. Then things got personal—by Beatrice Nolan

PwC’s global chairman says most leaders have forgotten ‘the basics’ as 56% are still getting ‘nothing’ out of AI adoption–by Diane Brady and Nick Lichtenberg

AI IN THE NEWS

EYE ON AI RESEARCH

Researchers say ChatGPT has a “silicon gaze” that amplifies global inequalities. A new study from the Oxford Internet Institute and the University of Kentucky analyzed over 20 million ChatGPT queries and found the AI systematically favors wealthier, Western regions, rating them as “smarter” and “more innovative” than poorer countries in the Global South. The researchers coined the term “silicon gaze” to describe how AI systems view the world through the lens of biased training data, mirroring historical power imbalances rather than providing objective answers. They argue these biases aren’t errors to be corrected, but structural features of AI systems that learn from data shaped by centuries of uneven information production, privileging places with extensive English-language coverage and strong digital visibility. The team has created a website–inequalities.ai–where people can explore how ChatGPT ranks their own neighborhood, city, or country across different lifestyle factors.

AI CALENDAR

Jan. 19-23: World Economic Forum, Davos, Switzerland.

Jan. 20-27: AAAI Conference on Artificial Intelligence, Singapore.

Feb. 10-11: AI Action Summit, New Delhi, India.

March 2-5: Mobile World Congress, Barcelona, Spain.

March 16-19: Nvidia GTC, San Jose, Calif.

BRAIN FOOD

Is Claude Code Anthropic’s ChatGPT moment? Anthropic has started the year with a viral moment most labs dream of. Despite Claude Code’s technical interface, the product has captured attention beyond the developer pool, with users building personal websites, analyzing health data, managing emails, and even monitoring tomato plants—all without writing a line of actual code. After several users pointed out that the product was much more of a general-use agent than the marketing and name suggested, the company launched Cowork—a more user-friendly version with a graphical interface built for non-developers.

Both Claude Code and Cowork’s ability to autonomously access, manipulate, and analyze files on a user’s computer has given many people a first taste of an AI agent that can actually take actions on their behalf, rather than just provide advice. Anthropic also saw a traffic lift as a result. Claude’s total web audience has more than doubled from December 2024, and its daily unique visitors on desktop are up 12% globally year-to-date compared with last month, according to data from market intelligence companies Similarweb and Sensor Tower published by The Wall Street Journal. But while some have hailed the products as the first step to getting a true AI personal assistant, the launch has also sparked concerns about job displacement and appears to put pressure on a few dozen startups that have built similar file management and automation tools.

FORTUNE AIQ: THE YEAR IN AI—AND WHAT’S AHEAD

Businesses took big steps forward on the AI journey in 2025, from hiring Chief AI Officers to experimenting with AI agents. The lessons learned—both good and bad–combined with the technology’s latest innovations will make 2026 another decisive year. Explore all of Fortune AIQ, and read the latest playbook below:

–The 3 trends that dominated companies’ AI rollouts in 2025.

–2025 was the year of agentic AI. How did we do?

–AI coding tools exploded in 2025. The first security exploits show what could go wrong.

–The big AI New Year’s resolution for businesses in 2026: ROI.

–Businesses face a confusing patchwork of AI policy and rules. Is clarity on the horizon?
