Business

GOP lawmakers in Indiana face ‘dangerous and intimidating process’ as Trump pushes redistricting

Published

2 days ago

By

Jace Porter

Spencer Deery’s son was getting ready for school when someone tried to provoke police into swarming his home by reporting a fake emergency.

Linda Rogers said there were threats at her home and the golf course that her family has run for generations.

Jean Leising faced a pipe bomb scare that was emailed to local law enforcement.

The three are among roughly a dozen Republicans in the Indiana Senate who have seen their lives turned upside down while President Donald Trump pushes to redraw the state’s congressional map to expand the party’s power in the 2026 midterm elections.

It’s a bewildering and frightening experience for lawmakers who consider themselves loyal party members and never imagined they would be doing their jobs under the same shadow of violence that has darkened American political life in recent years. Leising described it as “a very dangerous and intimidating process.”

Redistricting is normally done once a decade after a new national census. Trump wants to accelerate the process in hopes of protecting the Republicans’ thin majority in the U.S. House next year. His allies in Texas, Missouri, Ohio and North Carolina have already gone along with his plans for new political lines.

Now Trump’s campaign faces its greatest test yet in a stubborn pocket of Midwestern conservatism. Although Indiana Gov. Mike Braun and the House of Representatives are on board, the proposal may fall short with senators who value their civic traditions and independence over what they fear would be short-term partisan gain.

“When you have the president of the United States and your governor sending signals, you want to listen to them,” said Rogers, who has not declared her position on the redistricting push. “But it doesn’t mean you’ll compromise your values.”

On Friday, Trump posted a list of senators who “need encouragement to make the right decision,” and he took to social media Saturday to say that if legislators “stupidly say no, vote them out of Office – They are not worthy – And I will be there to help!” Meanwhile, the conservative campaign organization Turning Point Action said it would spend heavily to unseat anyone who voted “no.”

Senators are scheduled to convene Monday to consider the proposal after months of turmoil. Resistance could signal the limits of Trump’s otherwise undisputed dominance of the Republican Party.

Threats shadow redistricting session

Deery considers himself lucky. The police in his hometown of West Lafayette knew the senator was a potential target for “swatting,” a dangerous type of hoax in which someone reports a fake emergency to provoke an aggressive response from law enforcement.

So when Deery was targeted last month while his son and others were waiting for their daily bus ride to school, officers did not rush to the scene.

“You could have had SWAT teams driving in with guns out while there were kids in the area,” he said.

Deery was one of the first senators to publicly oppose the mid-decade redistricting, arguing it interferes with voters’ right to hold lawmakers accountable through elections.

“The country would be an uglier place for it,” he said just days after Vice President JD Vance visited the state in August, the first of two trips to talk with lawmakers about approving new maps.

Republican leaders in the Indiana Senate said in mid-November that they would not hold a vote on the matter because there was not enough support for it. Trump lashed out on social media, calling the senators weak and pathetic.

“Any Republican that votes against this important redistricting, potentially having an impact on America itself, should be PRIMARIED,” he wrote.

The threats against senators began shortly after that.

Sen. Sue Glick, a Republican who was first elected in 2010 and previously served as a local prosecutor, said she has never seen “this kind of rancor” in politics in her lifetime. She opposes redistricting, saying “it has the taint of cheating.”

Not even the plan’s supporters are immune to threats.

Republican Sen. Andy Zay said his vehicle-leasing business was targeted with a pipe bomb scare on the same day he learned that he would face a primary challenger who accuses Zay of being insufficiently conservative.

Zay, who has spent a decade in the Senate, believes the threat was related to his criticism of Trump’s effort to pressure lawmakers. But the White House has not heeded his suggestions to build public support for redistricting through a media campaign.

“When you push us around and into a corner, we’re not going to change because you hound us and threaten us,” Zay said. “For those who have made a decision to stand up for history and tradition, the tactics of persuasion do not embolden them to change their viewpoint.”

The White House did not respond to messages seeking a reaction to Zay’s comments.

Trump sees mixed support from Indiana

Trump easily won Indiana in all his presidential campaigns, and its leaders are unquestionably conservative. For example, the state was the first to restrict abortion after the U.S. Supreme Court overturned Roe v. Wade.

But Indiana’s political culture never became saturated with the sensibilities of Trump’s “Make America Great Again” movement. Some 21% of Republican voters backed Nikki Haley over Trump in last year’s presidential primary, even though the former South Carolina governor had already suspended her campaign two months earlier.

Trump also holds a grudge against Indiana’s Mike Pence, who served the state as a congressman and governor before becoming Trump’s first vice president. A devout evangelical, Pence loyally accommodated Trump’s indiscretions and scandals but refused to go along with Trump’s attempt on Jan. 6, 2021, to overturn Democrat Joe Biden’s victory.

“Mike Pence didn’t have the courage to do what was necessary,” Trump posted online after an angry crowd of his supporters breached the U.S. Capitol.

Pence has not taken a public stance on his home state’s redistricting effort. But the governor before him, Republican Mitch Daniels, recently said it was “clearly wrong.”

The proposed map, which was released Monday and approved by the state House on Friday, attempts to dilute the influence of Democratic voters in Indianapolis by splitting up the city. Parts of the capital would be grafted onto four different Republican-leaning districts, one of which would stretch all the way south to the border with Kentucky.

Rogers, the senator whose family owns the golf course, declined to discuss her feelings about the redistricting. A soft-spoken business leader from the suburbs of South Bend, she said she was “very disappointed” about the threats.

On Monday, Rogers will be front and center as a member of the Senate Elections Committee, the first one in that chamber to consider the redistricting bill.

“We need to do things in a civil manner and have polite discourse,” she said.


Business

The problem with ‘human in the loop’ AI? Often, it’s the humans

Published

2 minutes ago, on December 9, 2025

By

Jace Porter

Welcome to Eye on AI. In this edition…AI is outperforming some professionals…Google plans to bring ads to Gemini…leading AI labs team up on AI agent standards…a new effort to give AI models a longer memory…and the mood turns on LLMs and AGI.

Greetings from San Francisco, where we are just wrapping up Fortune Brainstorm AI. On Thursday, we’ll bring you a roundup of insights from the conference. But today, I want to talk about some notable studies from the past few weeks with potentially big implications for the business impact AI may have.

First, there was a study from the AI evaluations company Vals AI that pitted several legal AI applications as well as ChatGPT against human lawyers on legal research tasks. All of the AI applications beat the average human lawyers (who were allowed to use digital legal search tools) in drafting legal research reports across three criteria: accuracy, authoritativeness, and appropriateness. The lawyers’ aggregate median score was 69%, while ChatGPT scored 74%, Midpage 76%, Alexi 77%, and Counsel Stack, which had the highest overall score, 78%.

One of the more intriguing findings is that for many question types, it was the generalist ChatGPT that was the most accurate, beating out the more specialized applications. And while ChatGPT lost points for authoritativeness and appropriateness, it still topped the human lawyers across those dimensions.

The study has been faulted for not testing some of the better-known and most widely adopted legal AI research tools, such as Harvey, Legora, CoCounsel from Thomson Reuters, or LexisNexis Protégé, and for only testing ChatGPT among the frontier general-purpose models. Still, the findings are notable and comport with what I’ve heard anecdotally from lawyers.

A little while ago I had a conversation with Chris Kercher, a litigator at Quinn Emanuel who founded that firm’s data and analytics group. Quinn Emanuel has been using Anthropic’s general purpose AI model Claude for a lot of tasks. (This was before Anthropic’s latest model, Claude Opus 4.5, debuted.) “Claude Opus 3 writes better than most of my associates,” Kercher told me. “It just does. It is clear and organized. It’s a great model.” He said he is “constantly amazed” by what LLMs can do, finding new issues, strategies, and tactics that he can use to argue cases.

Kercher said that AI models have allowed Quinn Emanuel to “invert” its prior work processes. In the past, junior lawyers—who are known as associates—used to spend days researching and writing up legal memos, finding citations for every sentence, before presenting those memos to more senior lawyers who would incorporate some of that material into briefs or arguments that would actually be presented in court. Today, he says, AI is used to generate drafts that Kercher said are by and large better, in a fraction of the time, and then these drafts are given to associates to vet. The associates are still responsible for the accuracy of the memos and citations—just as they always were—but now they are fact-checking the AI and editing what it produces, not performing the initial research and drafting, he said.

He said that the most experienced, senior lawyers often get the most value out of working with AI, because they have the expertise to know how to craft the perfect prompt, along with the professional judgment and discernment to quickly assess the quality of the AI’s response. Is the argument the model has come up with sound? Is it likely to work in front of a particular judge or be convincing to a jury? These sorts of questions still require judgment that comes from experience, Kercher said.

Ok, so that’s law, but it likely points to ways in which AI is beginning to upend work within other “knowledge industries” too. Here at Brainstorm AI yesterday, I interviewed Michael Truell, the cofounder and CEO of hot AI coding tool Cursor. He noted that in a University of Chicago study looking at the effects of developers using Cursor, it was often the most experienced software engineers who saw the most benefit from using Cursor, perhaps for some of the same reasons Kercher says experienced lawyers get the most out of Claude—they have the professional experience to craft the best prompts and the judgment to better assess the tools’ outputs.

Then there was a study out on the use of generative AI to create visuals for advertisements. Business professors at New York University and Emory University tested whether advertisements for beauty products created by human experts alone, created by human experts and then edited by AI models, or created entirely by AI models were most appealing to prospective consumers. They found the ads that were entirely AI generated were chosen as the most effective—increasing clickthrough rates in a trial they conducted online by 19%. Meanwhile, those created by humans and edited by AI were actually less effective than those simply created by human experts with no AI intervention. But, critically, if people were told the ads were AI-generated, their likelihood of buying the product declined by almost a third.

Those findings present a big ethical challenge to brands. Most AI ethicists think people should generally be told when they are consuming content generated by AI. And advertisers do need to negotiate various Federal Trade Commission rulings around “truth in advertising.” But many ads already use actors posing in various roles without needing to necessarily tell people that they are actors—or the ads do so only in very fine print. How different is AI-generated advertising? The study seems to point to a world where more and more advertising will be AI-generated and where disclosures will be minimal.

The study also seems to challenge the conventional wisdom that “centaur” solutions (which combine the strengths of humans and those of AI in complementary ways) will always perform better than either humans or AI alone. (Sometimes this is condensed to the aphorism “AI won’t take your job. A human using AI will take your job.”) A growing body of research seems to suggest that in many areas, this simply isn’t true. Often, the AI on its own actually produces the best results.

But it is also the case that whether centaur solutions work well depends tremendously on the exact design of the human-AI interaction. A study on human doctors using ChatGPT to aid diagnosis, for example, found that humans working with AI could indeed produce better diagnoses than either doctors or ChatGPT alone—but only if ChatGPT was used to render an initial diagnosis and human doctors, with access to the ChatGPT diagnosis, then gave a second opinion. If that process was reversed, and ChatGPT was asked to render the second opinion on the doctor’s diagnosis, the results were worse—and in fact, the second-best results were just having ChatGPT provide the diagnosis. In the advertising study, it would have been good if the researchers had looked at what happens if AI generates the ads and then human experts edit them.

But in any case, momentum towards automation—often without a human in the loop—is building across many fields.

On that happy note, here’s more AI news.

Jeremy Kahn

jeremy.kahn@fortune.com

@jeremyakahn

FORTUNE ON AI

Exclusive: Glean hits $200 million ARR, up from $100 million 9 months back—by Allie Garfinkle

Cursor developed an internal AI help desk that handles 80% of its employees’ support tickets, says the $29 billion startup’s CEO—by Beatrice Nolan

HP’s chief commercial officer predicts the future will include AI-powered PCs that don’t share data in the cloud—by Nicholas Gordon

OpenAI COO Brad Lightcap says code red will ‘force’ the company to focus, as the ChatGPT maker ramps up enterprise push—by Beatrice Nolan

AI IN THE NEWS

Trump allows Nvidia to sell H200 GPUs to China, but China may limit adoption. President Trump signaled he would allow exports of Nvidia’s high-end H200 chips to approved Chinese customers. Nvidia CEO Jensen Huang has called China a $50 billion annual sales opportunity for the company, but Beijing wants to limit the reliance of its companies on U.S.-made chips, and Chinese regulators are weighing an approval system that would require buyers to justify why domestic chips cannot meet their needs. They may even bar the public sector from purchasing H200s. But Chinese companies often prefer to use Nvidia chips and even train their models outside of China to get around U.S. export controls. Trump’s decision has triggered political backlash in Washington, with a bipartisan group of senators seeking to block such exports, though the legislation’s prospects remain uncertain. Read more from the Financial Times here.

Trump plans executive order on national AI standard, aimed at pre-empting state-level regulation. President Trump said he will issue an executive order this week creating a single national artificial-intelligence standard, arguing that companies cannot navigate a patchwork of 50 different state approval regimes, Politico reported. The move follows a leaked November draft order that sought to block state AI laws and reignited debate over whether federal rules should override state and local regulations. A previous attempt to add AI-preemption language to the year-end defense bill collapsed last week, prompting the administration to return to pursuing the policy through executive action instead.

Google plans to bring advertising to its Gemini chatbot in 2026. That’s according to a report in Adweek that cited information from two unnamed Google advertising clients. The story said that details on format, pricing, and testing remained unclear. It also said the new ad format for Gemini is separate from ads that will appear alongside “AI Mode” searches in Google Search.

Former Databricks AI head’s new AI startup valued at $4.5 billion in seed round. Unconventional AI, a startup cofounded by former Databricks AI head Naveen Rao, raised $475 million in a seed round led by Andreessen Horowitz and Lightspeed Venture Partners at a valuation of $4.5 billion—just two months after its founding, Bloomberg News reported. The company aims to build a novel, more energy-efficient computing architecture to power AI workloads.

Anthropic forms partnership with Accenture to target enterprise customers. Anthropic and Accenture have formed a three-year partnership that makes Accenture one of Anthropic’s largest enterprise customers and aims to help businesses—many of which remain skeptical—realize tangible returns from AI investments, the Wall Street Journal reported. Accenture will train 30,000 employees on Claude and, together with Anthropic, launch a dedicated business group targeting highly regulated industries and embedding engineers directly with clients to accelerate adoption and measure value.

OpenAI, Anthropic, Google, and Microsoft team up for new standard for agentic AI. The Linux Foundation is organizing a group called the Agentic Artificial Intelligence Foundation with participation from major AI companies, including OpenAI, Anthropic, Google, and Microsoft. It aims to create shared open-source standards that allow AI agents to reliably interact with enterprise software. The group will focus on standardizing key tools such as the Model Context Protocol, OpenAI’s Agents.md format, and Block’s Goose agent, aiming to ensure consistent connectivity, security practices, and contribution rules across the ecosystem. CIOs increasingly say common protocols are essential for fixing vulnerabilities and enabling agents to function smoothly in real business environments. Read more here from The Information.

EYE ON AI RESEARCH

Google has created a new architecture to give AI models longer-term memory. The architecture, called Titans—which Google first debuted at the start of 2025 and which Eye on AI covered at the time—is paired with a framework named MIRAS that is designed to give AI something closer to long-term memory. Instead of forgetting older details when its short memory window fills up, the system uses a separate memory module that continually updates itself. The system assesses how surprising any new piece of information is compared to what it has stored in its long-term memory, updating the memory module only when it encounters high surprise. In testing, Titans with MIRAS performed better than older models on tasks that require reasoning over long stretches of information, suggesting it could eventually help with things like analyzing complex documents, doing in-depth research, or learning continuously over time. You can read Google’s research blog here.

AI CALENDAR

Jan. 6: Fortune Brainstorm Tech CES Dinner. Apply to attend here.

Jan. 19-23: World Economic Forum, Davos, Switzerland.

Feb. 10-11: AI Action Summit, New Delhi, India.

BRAIN FOOD

At NeurIPS, the mood shifts against LLMs as a path to AGI. The Information reported that a growing number of researchers attending NeurIPS, the AI research field’s most important conference—which took place last week in San Diego (with satellite events in other cities)—are increasingly skeptical of the idea that large language models (LLMs) will ever lead to artificial general intelligence (AGI). Instead, they feel the field may need an entirely new kind of AI architecture to advance to more human-like AI that can continually learn, can learn efficiently from fewer examples, and can extrapolate and analogize concepts to previously unseen problems.

Figures such as Amazon’s David Luan and OpenAI co-founder Ilya Sutskever contend that current approaches, including large-scale pre-training and reinforcement learning, fail to produce models that truly generalize, while new research presented at the conference explores self-adapting models that can acquire new knowledge on the fly. Their skepticism contrasts with the view of leaders like Anthropic CEO Dario Amodei and OpenAI’s Sam Altman, who believe scaling current methods can still achieve AGI. If critics are correct, it could undermine billions of dollars in planned investment in existing training pipelines.

Business

OpenAI COO Brad Lightcap says code red will ‘force’ focus, as ChatGPT maker ramps up enterprise push

Published

33 minutes ago, on December 9, 2025

By

Jace Porter

OpenAI’s Chief Operating Officer Brad Lightcap says the company’s recent ‘code red’ alert will force the $500 billion startup to “focus” as it faces heightened competition in the technical capabilities of its AI models and in making inroads among business customers.

“I think a big part of it is really just starting to push on the rate at which we see improvement in focus areas within the models,” Lightcap said on stage at Fortune’s Brainstorm AI conference in San Francisco on Tuesday. “What you’re going to see, even starting fairly soon, will be a really exciting series of things that we release.”

Last week, in an internal memo shared with employees, OpenAI CEO Sam Altman said he was declaring a “Code Red” alarm within the organization, according to reports from The Information and the Wall Street Journal. Altman told employees it was “a critical time for ChatGPT,” the company’s flagship product, and that OpenAI would delay other initiatives, including its advertising plans, to focus on improving the core product.

Speaking at the event on Tuesday, Lightcap framed the code red alert as a standard practice that many businesses occasionally undertake to sharpen focus, and not an OpenAI-specific action. But Lightcap acknowledged the importance of the move at OpenAI at this moment, given the growth in headcount and projects over the past couple of years.

“It’s a way of forcing company focus,” Lightcap said. “For a company that’s doing a bazillion things, it’s actually quite refreshing.”

He continued: “We will come out of it. I think what comes out of it that way will be really exciting.”

In addition to the increasing pressure from Google and its Gemini family of LLMs, OpenAI is facing heightened competition from rival AI lab Anthropic among enterprise customers. Anthropic has emerged as a favorite for businesses, particularly software engineers, due to its popular coding tools and reputation for AI safety.

Lightcap told the audience that the company was focused on pushing enterprise adoption of AI tools. He said OpenAI was developing two main levels of enterprise products: user-focused solutions like ChatGPT, which boost team productivity, and lower-level APIs for developers to build custom applications. However, he noted the company currently lacks offerings in the middle tier, such as tools that are user-directed but also have deep integration into enterprise systems, like AI coding assistants that employees can direct while tapping into the organization’s code bases. He said the company was also prioritizing further investments to enable enterprises to tackle longer-term, complex tasks using AI.

Business

AI isn’t the reason you got laid off (or not hired), top staffing agency says

Published

1 hour ago, on December 9, 2025

By

Jace Porter

AI is not the main reason most people are losing their jobs right now; weak demand, economic headwinds, and skill mismatches are doing more of the damage, according to the latest quarterly outlook from ManpowerGroup, one of the largest staffing agencies in the world. While automation and AI are surely reshaping job descriptions and long‑term hiring plans, the first-quarter 2026 employment outlook survey suggests workers without the right mix of technical and human skills are far more exposed than those whose capabilities match what employers say they need.

ManpowerGroup claims its Employment Outlook Survey, launched in 1962, is the most extensive forward-looking survey of its kind, unparalleled in size, scope, and longevity, and one of the most trusted indicators of labor market trends worldwide. Looking ahead to the turn of the year, the survey says employers around the globe still plan to hire, but at a slower pace and with fewer additions to headcount than earlier in the pandemic recovery.

Globally, 40% of organizations expect to increase staffing in the first quarter and another 40% plan to keep headcount unchanged, yet the typical company now anticipates adding only eight workers, down steadily from mid‑2025 levels. Large enterprises with 5,000 or more employees have cut their planned hiring roughly in half since the second quarter of 2025, underscoring just how much large employers are tightening belts even as they keep recruiting in priority areas.

Regional patterns are uneven. North America’s employment outlook has dropped sharply year on year to one of its weakest readings in nearly five years, while South and Central America and the Asia Pacific–Middle East region report comparatively stronger optimism. Europe’s outlook is muted, with only a small decline from last year, suggesting that many employers there are in wait‑and‑see mode rather than embarking on aggressive expansion or deep cuts.

Talent shortage, not job shortage

Despite cooling hiring volumes, 72% of organizations say they still struggle to find skilled talent, only slightly less than a year ago, reinforcing the idea that there is a talent shortage, not a work shortage. Europe reports the most acute pressure, with nearly three‑quarters of employers citing difficulty filling roles, while South and Central America report the least, though two‑thirds of companies in that region are still affected.

The survey suggests shortages are particularly severe in the information sector and in public services such as health and social care. In those fields, three‑quarters of organizations report difficulty finding the right people, even as some workers in adjacent roles complain of layoffs and stalled careers, highlighting the growing gap between available workers and the specific skills employers require.

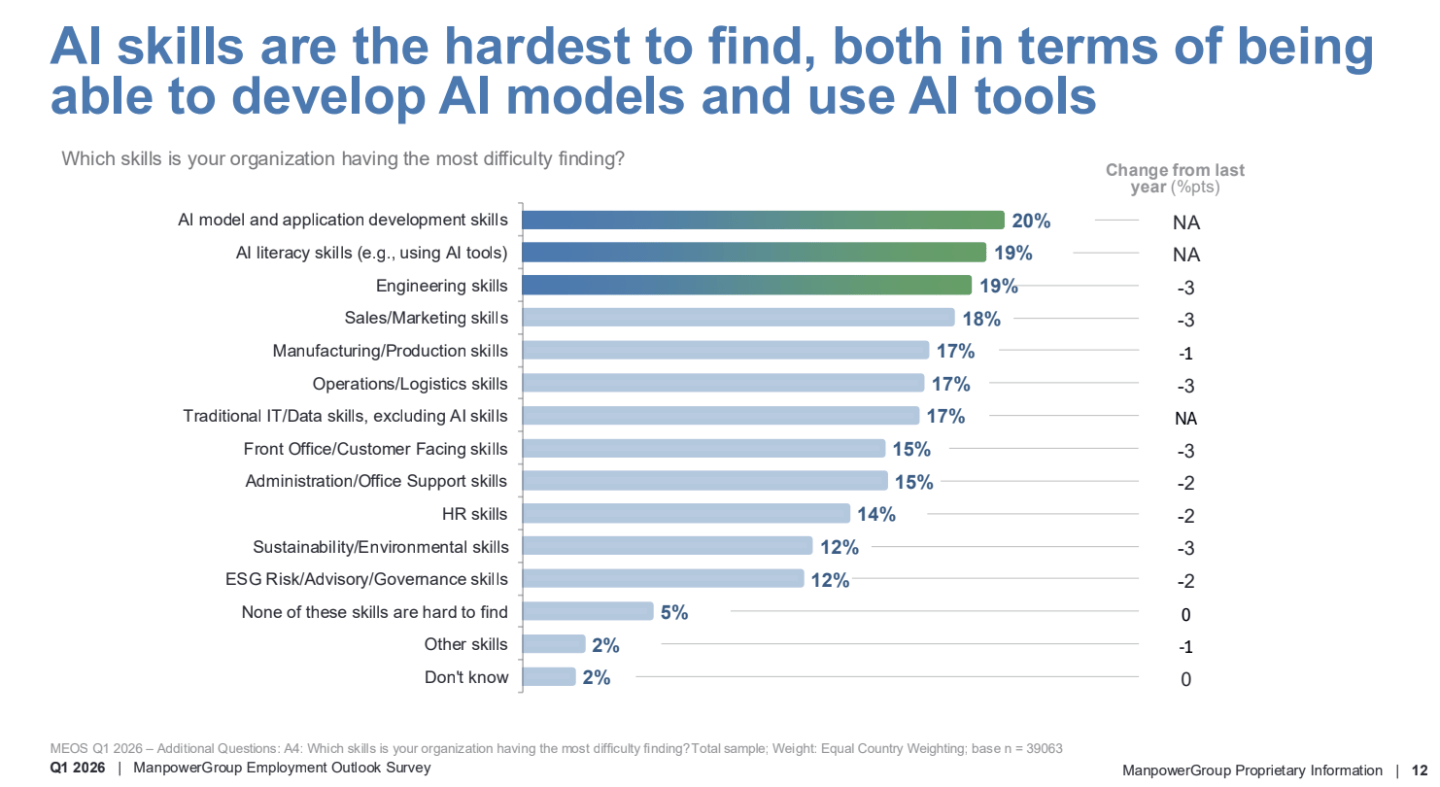

AI skills are scarce, but AI isn’t the axe

If AI were the primary driver of layoffs, employers would not simultaneously report that the hardest capabilities to find are AI‑related. Yet 20% of organizations say AI model and application development skills are the most difficult to hire for, and another 19% say the same about AI literacy, meaning the ability to use AI tools effectively; in Asia-Pacific and the Middle East, these shortages are even more pronounced.

At the same time, when firms do reduce staff, they mostly blame the economy, not automation. Employers who expect to downsize cite economic challenges, weaker demand, market shifts, and reorganizations as the top reasons for cuts, with automation and efficiency improvements playing a secondary role and affecting only certain roles or functions. Changes in required skills appear at the bottom of the list of stated reasons for staff reductions, suggesting that technology is transforming jobs more often than it is eliminating them outright.

Skills mismatch at the heart of layoffs

The report points to a widening skills mismatch as a central fault line in the labor market. Employers say the skills needed for their services have changed, creating new roles in some areas while making other roles redundant, and they struggle to rehire for positions that require capabilities many displaced workers do not yet possess. For organizations that are adding staff, nearly a quarter say advancements in technology are driving that hiring, but they need workers with the right expertise to fill those tech‑driven roles.

Courtesy of ManpowerGroup

Outside of hard technical skills, hiring managers are clear about what they want: Communication, collaboration, and teamwork top the list of soft skills, followed by professionalism, adaptability, and critical thinking. Digital literacy is also rising in importance, especially in information‑heavy sectors, making it harder for workers who lack basic comfort with technology to compete even for nontechnical jobs.

Rather than replacing workers with machines outright, many employers are trying to bridge the gap by retraining the people they already have. Upskilling and reskilling remain the most common strategies for dealing with talent shortages, ahead of raising wages, turning to contractors, or using AI and automation explicitly to shrink headcount.

Larger companies are particularly invested in this approach, with the share of organizations prioritizing upskilling rising along with firm size. Employers in every major region report plans to train workers for new tools and workflows, reflecting the recognition that technology’s rapid advance will demand continuous learning rather than one‑time restructurings.

The big grain of salt for this survey is that it is limited to the next quarter. In the case of a worse long-term downturn, all bets could be off about just how many jobs could be automated with AI tools. This question is beyond the scope of the Manpower survey, but Goldman Sachs economists tackled the issue in October, writing, “History also suggests that the full consequences of AI for the labor market might not become apparent until a recession hits.” David Mericle and Pierfrancesco Mei noted that job growth has been modest in recent quarters while GDP growth has been robust, and that this is “likely to be normal to some degree in the years ahead,” citing an aging society and lower immigration. The result is an oxymoron: “jobless growth.”

Until the era of jobless growth fully arrives, though, the Manpower survey suggests that growth will consist of hiring humans who have the right AI skills, whatever those turn out to be.

For this story, Fortune journalists used generative AI as a research tool. An editor verified the accuracy of the information before publishing.
