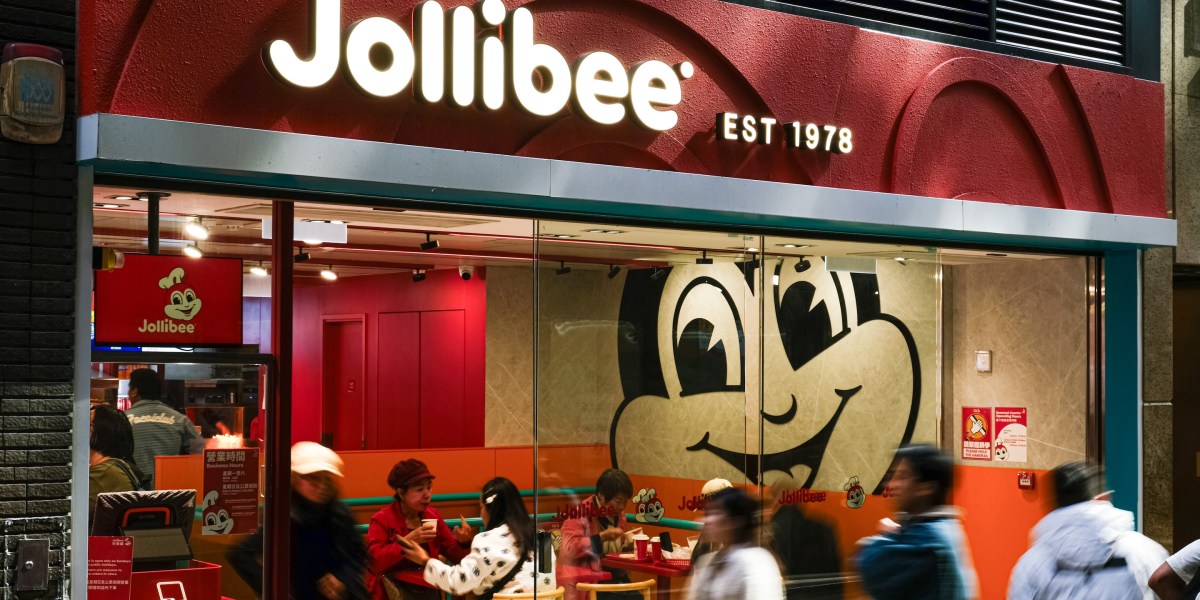

Good morning. Chickenjoy—its crispy, juicy fried chicken—and Jolly Spaghetti are signature menu items at Jollibee, a Filipino fast-food chain that is building a growing fan base in the U.S. Now, the company is setting its sights on Wall Street.

The Philippines-based Jollibee Foods Corporation (JFC), the restaurant’s parent company, disclosed earlier this month that it plans to spin off its international operations and pursue a U.S. initial public offering for that business. The contemplated spin-off and listing are targeted for late 2027, leaving “quite a bit of time ahead of us for the work to be done,” Jollibee Global CFO Richard Shin said during a Jan. 14 media roundtable.

JFC, which includes restaurant brands such as Smashburger and The Coffee Bean & Tea Leaf, is currently traded as a single group on the Philippine Stock Exchange and operates in 33 countries. Over the past 15 quarters, JFC’s international network has posted a 26.7% compound annual growth rate, outpacing the group’s overall 15.1% rate of expansion. The separation reflects increasingly distinct strategic profiles for the domestic and international businesses, Shin said.

In March 2025, Jollibee launched its first U.S. franchising program. After opening its first North American location in 1998 in Daly City, California, the brand has since expanded to more than 100 locations across the U.S. and Canada as of early 2026.

Why go the route of a U.S. IPO? “I think there’s a fact that we can all agree on: the U.S. capital markets have deep investor-based experience in valuing global consumer and restaurant growth companies,” Shin said on the call.

Many such companies are still growing into their potential yet are often rewarded with higher multiples and valuations, he said. While that outcome is not guaranteed for JFC, a U.S. listing offers greater capital depth, liquidity, and broader analyst coverage, with any final decision subject to valuation and required approvals, he added.

The IPO market in the U.S. is heating up again, Fortune’s Jeff John Roberts writes in a new feature article. “While 2026 will almost certainly not match the banner year of 1999, which saw 476 companies go public, investors should have far more choices than they did four years ago, when just 38 firms held an IPO,” he writes.

Shin also framed the separation of JFC in terms of simplifying how investors assess the corporation, noting the group includes businesses at different stages of their life cycles, with varying returns and opportunities. Distinct domestic and international entities, he suggested, could offer investors clearer, more targeted investment options as the strategic profiles of the two segments continue to diverge.

Reasons for pursuing the separation include improved transparency, discipline in capital allocation, execution against the growth strategy, and the ability to attract an investor base aligned with the risk–return profile of each business rather than being judged solely on short-term financial metrics, he said.

“The transaction is aligned with the Jollibee Group’s long-term value creation strategy,” Shin said.

With its eyes on Wall Street, Jollibee is betting that global taste and investor appetite will be on its side.

Sheryl Estrada

sheryl.estrada@fortune.com

Leaderboard

Helen Cai was appointed senior executive vice president and CFO of Barrick Mining Corporation (NYSE: B), effective March 1, following the departure of long-serving finance chief Graham Shuttleworth, who will be leaving the company after its year-end results. Cai has served on Barrick’s board since November 2021 and brings more than 20 years of experience in equity research, corporate finance, capital markets, and M&A at firms across the mining, industrial, and technology sectors, primarily with Goldman Sachs and China International Capital Corporation.

Meredith Peck was named CFO of Zekelman Industries, the largest independent steel pipe and tube manufacturer in North America. Peck succeeds Mike Graham, who will retire on May 15 following a planned transition period. She brings more than 20 years of financial leadership experience to Zekelman Industries and most recently served as CFO for COTSWORKS, Inc., after earlier roles as the company’s controller and then vice president of finance and administration. Before that, Peck held senior leadership roles at KeyBank. She began her career in public accounting at PwC and is also a former U.S. Coast Guard officer.

Big Deal

In a blog post on Sunday, OpenAI CFO Sarah Friar provided an update on the tech giant, including its revenue. Annual recurring revenue reached $2 billion in 2023, rose to $6 billion in 2024, and jumped to more than $20 billion in 2025.

This revenue growth closely tracked an expansion in computing capacity. OpenAI’s computing capacity rose from 0.2 gigawatts (GW) in 2023 to 0.6 GW in 2024 and about 1.9 GW in 2025.

Friar writes: “Compute is the scarcest resource in AI. Three years ago, we relied on a single compute provider. Today, we are working with providers across a diversified ecosystem. That shift gives us resilience and, critically, compute certainty.”

In an accompanying LinkedIn post, Friar said that from a finance perspective, demand is real and growing at rates never seen by any company previously, and that customers are paying in proportion to the value delivered. She added that capital is being deployed deliberately into the constraints that actually matter, especially compute.

Going deeper

ACCA (the Association of Chartered Certified Accountants) and IMA (Institute of Management Accountants) have published a Global Economic Conditions Survey, based on the results of their Q4 2025 poll. Members from around the world share their views on the macroeconomic environment.

Confidence among CFOs improved somewhat, but remained below its historic average, and the key indicators point to caution at their firms, according to the findings. Accountants flagged economic pressure, cyber disruption, and geopolitical uncertainty as the top risk priorities, underscoring that risks are increasingly complex and interlinked.

“Accountants remain cautious entering 2026, amid a highly uncertain global backdrop,” Jonathan Ashworth, chief economist of ACCA, said in a statement. “The global economy performed better than expected in 2025 and looks set to remain resilient in 2026 amid recent monetary easing by central banks, stock market gains, supportive fiscal policies in key countries, and the ongoing global AI boom.” However, there remains significant uncertainty, amid a wide range of risks, “not least on the geopolitical front, which are more heavily skewed to the downside,” he said.

Overheard

“We are entering an IPO ‘mega‑cycle’ that we expect will be defined by unprecedented deal volume and IPO sizes.”

—Goldman Sachs’ global co-head of investment banking, Kim Posnett, recently told Fortune. Posnett discussed how she sees the current business environment and the most significant developments in 2026 in terms of AI, the IPO market, and M&A activity. Posnett, named among the leaders on Fortune’s Most Powerful Women list, is one of the bank’s top dealmakers and also serves as vice chair of the Firmwide Client Franchise Committee and as a member of the Management Committee.
