Business

AI isn’t the reason you got laid off (or not hired), top staffing agency says

Published

2 hours ago

By

Jace Porter

AI is not the main reason most people are losing their jobs right now; weak demand, economic headwinds, and skill mismatches are doing more of the damage, according to the latest quarterly outlook from ManpowerGroup, one of the largest staffing agencies in the world. While automation and AI are surely reshaping job descriptions and long‑term hiring plans, the first-quarter 2026 employment outlook survey suggests workers without the right mix of technical and human skills are far more exposed than those whose capabilities match what employers say they need.

ManpowerGroup claims its Employment Outlook Survey, launched in 1962, is the most extensive forward-looking survey of its kind, unparalleled in size, scope, and longevity, and one of the most trusted indicators of labor market trends worldwide. Looking ahead to the turn of the year, the survey says employers around the globe still plan to hire, but at a slower pace and with fewer additions to headcount than earlier in the pandemic recovery.

Globally, 40% of organizations expect to increase staffing in the first quarter and another 40% plan to keep headcount unchanged, yet the typical company now anticipates adding only eight workers, down steadily from mid‑2025 levels. Large enterprises with 5,000 or more employees have cut their planned hiring roughly in half since the second quarter of 2025, underscoring just how much large employers are tightening belts even as they keep recruiting in priority areas.

Regional patterns are uneven. North America’s employment outlook has dropped sharply year on year to one of its weakest readings in nearly five years, while South and Central America and the Asia Pacific–Middle East region report comparatively stronger optimism. Europe’s outlook is muted, with only a small decline from last year, suggesting that many employers there are in wait‑and‑see mode rather than embarking on aggressive expansion or deep cuts.

Talent shortage, not job shortage

Despite cooling hiring volumes, 72% of organizations say they still struggle to find skilled talent, only slightly less than a year ago, reinforcing the idea that there is a talent shortage, not a work shortage. Europe reports the most acute pressure, with nearly three‑quarters of employers citing difficulty filling roles, while South and Central America report the least, though two‑thirds of companies in that region are still affected.

The survey suggests shortages are particularly severe in the information sector and in public services such as health and social care. In those fields, three‑quarters of organizations report difficulty finding the right people, even as some workers in adjacent roles complain of layoffs and stalled careers, highlighting the growing gap between available workers and the specific skills employers require.

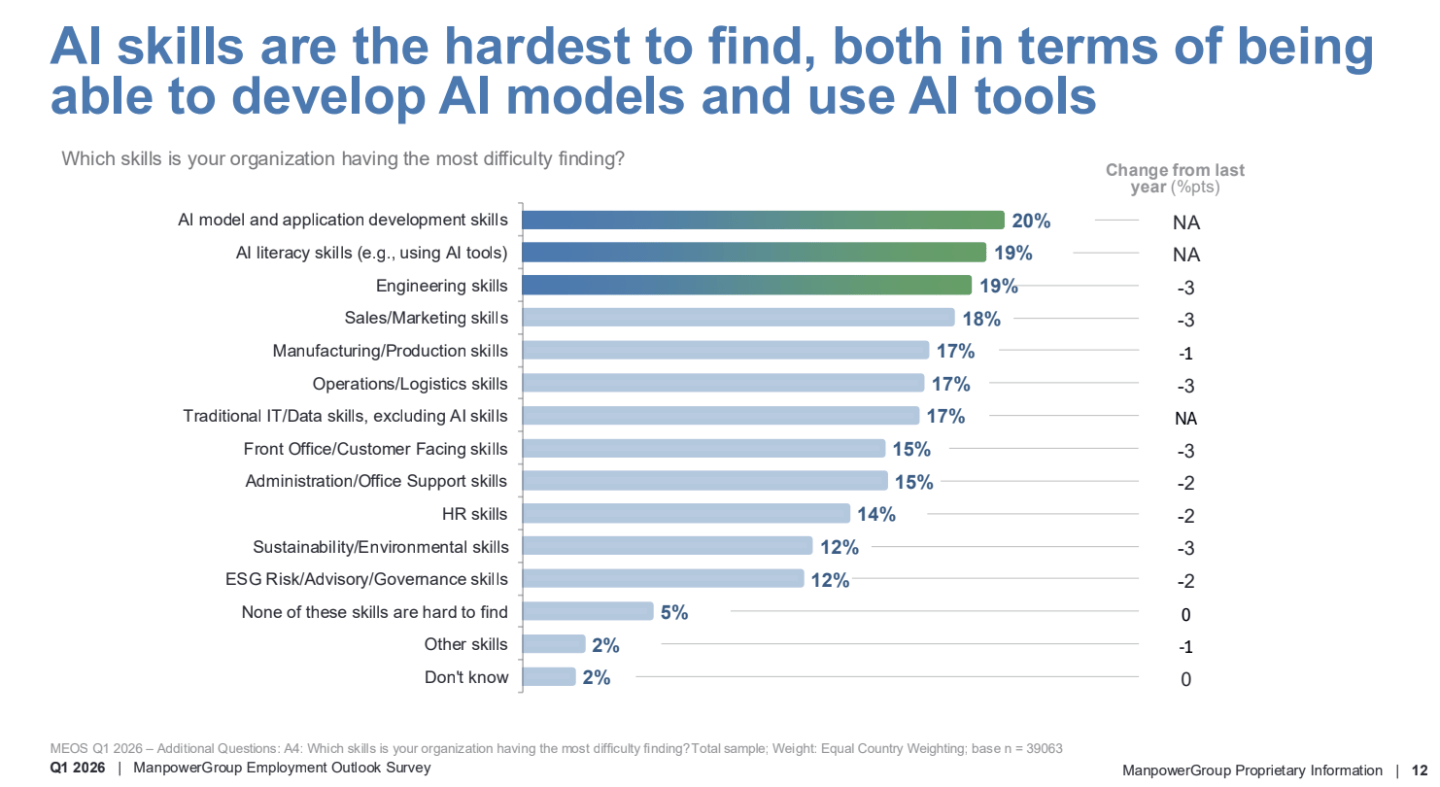

AI skills are scarce, but AI isn’t the axe

If AI were the primary driver of layoffs, employers would not simultaneously report that the hardest capabilities to find are AI‑related. Yet 20% of organizations say AI model and application development skills are the most difficult to hire for, and another 19% say the same about AI literacy, meaning the ability to use AI tools effectively; in Asia-Pacific and the Middle East, these shortages are even more pronounced.

At the same time, when firms do reduce staff, they mostly blame the economy, not automation. Employers who expect to downsize cite economic challenges, weaker demand, market shifts, and reorganizations as the top reasons for cuts, with automation and efficiency improvements playing a secondary role and affecting only certain roles or functions. Changes in required skills appear at the bottom of the list of stated reasons for staff reductions, suggesting that technology is transforming jobs more often than it is eliminating them outright.

Skills mismatch at the heart of layoffs

The report points to a widening skills mismatch as a central fault line in the labor market. Employers say the skills needed for their services have changed, creating new roles in some areas while making other roles redundant, and they struggle to rehire for positions that require capabilities many displaced workers do not yet possess. For organizations that are adding staff, nearly a quarter say advancements in technology are driving that hiring, but they need workers with the right expertise to fill those tech‑driven roles.

Courtesy of ManpowerGroup

Outside of hard technical skills, hiring managers are clear about what they want: Communication, collaboration, and teamwork top the list of soft skills, followed by professionalism, adaptability, and critical thinking. Digital literacy is also rising in importance, especially in information‑heavy sectors, making it harder for workers who lack basic comfort with technology to compete even for nontechnical jobs.

Rather than replacing workers with machines outright, many employers are trying to bridge the gap by retraining the people they already have. Upskilling and reskilling remain the most common strategies for dealing with talent shortages, ahead of raising wages, turning to contractors, or using AI and automation explicitly to shrink headcount.

Larger companies are particularly invested in this approach, with the share of organizations prioritizing upskilling rising along with firm size. Employers in every major region report plans to train workers for new tools and workflows, reflecting the recognition that technology’s rapid advance will demand continuous learning rather than one‑time restructurings.

The big grain of salt for this survey is that it is limited to the next quarter. In the case of a worse long-term downturn, all bets could be off about just how many jobs could be automated with AI tools. This question is beyond the scope of the Manpower survey, but Goldman Sachs economists tackled the issue in October, writing, “History also suggests that the full consequences of AI for the labor market might not become apparent until a recession hits.” David Mericle and Pierfrancesco Mei noted that job growth has been modest in recent quarters while GDP growth has been robust, and that is “likely to be normal to some degree in the years ahead,” noting an aging society and lower immigration. The result is an oxymoron: “jobless growth.”

Until the era of jobless growth fully arrives, though, the Manpower survey suggests that growth will consist of hiring humans who have the right AI skills, whatever those turn out to be.

For this story, Fortune journalists used generative AI as a research tool. An editor verified the accuracy of the information before publishing.


Business

Jamie Dimon taps Jeff Bezos, Michael Dell and Ford CEO Jim Farley to advise JPMorgan’s $1.5 trillion national-security initiative

Published

22 minutes ago on December 9, 2025

By

Jace Porter

Jamie Dimon’s JPMorganChase just unveiled a list of business leaders and retired government officials who will make up a new advisory team to guide the investment bank’s $1.5 trillion national-security initiative.

The external advisory council, announced on Monday, features prominent tech business leaders Jeff Bezos and Michael Dell as well as Ford CEO Jim Farley, alongside a number of national security and defense experts.

JPMorganChase first announced its national-security push—coined the Security and Resilience Initiative (SRI)—in October by saying it would first invest up to $10 billion in direct equity and venture capital to companies it characterizes as paramount to U.S. national security.

Dimon also said on Monday he poached one of Warren Buffett’s personally selected investors to head the investment fund starting in January.

Both of the announcements are initial steps to realizing the company’s national-security pledge, which will span the next 10 years.

The council will be chaired by Dimon himself, and will “convene periodically” to “help spur growth and innovation in industries critical to the United States’ national security and economic resiliency,” the company said in its press release.

“We are humbled by the extraordinary group of leaders and public servants who have agreed to join our efforts as senior advisors to the SRI,” Dimon said in the Monday announcement. “With their help, we can ensure that our firm takes a holistic approach to addressing key issues facing the United States—supporting companies across all sizes and development stages through advice, financing and equity capital.”

Here is a list of the advisory council members:

Business leaders

- Jeff Bezos, executive chairman and founder of Amazon and founder of Blue Origin

Bezos previously partnered with Dimon and Warren Buffett on the not-for-profit Haven health‑care venture in 2018, which was backed by Amazon, JPMorgan, and Berkshire Hathaway. Dimon has said the two “hit it off” in 1999, and Bezos even discussed hiring Dimon as Amazon’s president before Dimon chose to stay in banking.

- Michael Dell, CEO of Dell Technologies

Dell worked closely with Dimon and JPMorgan when the bank led the multibillion‑dollar financing for Dell’s $67 billion takeover of tech giant EMC in 2015, the largest tech deal ever at the time.

- Jim Farley, CEO of Ford Motor Company

Farley has publicly warned about U.S. dependence on China for chips and rare earths, arguing it is a strategic vulnerability. In a third-quarter earnings call in October, he told investors he had discussed these issues with U.S. officials as a chip shortage caused by China threatened to impact the automaker.

- Alex Gorsky, former CEO of Johnson & Johnson

Gorsky, most recently the company’s executive chairman, oversaw the company’s expansion and helped steer J&J through the Covid‑19 vaccine rollout as CEO.

- Phebe Novakovic, CEO of General Dynamics

Novakovic previously worked in the U.S. government in roles at the Central Intelligence Agency and the Department of Defense before moving to the private sector in 2001. After working her way up at General Dynamics, she now heads one of the Pentagon’s major defense contractors.

- Todd Combs, Berkshire Hathaway investment manager, CEO of GEICO

Combs, a longtime Berkshire Hathaway investment manager and the CEO of Geico, left Geico this week and is also leaving his Berkshire role to lead JPMorganChase’s SRI Strategic Investment Group and join the advisory council in early 2026. For years, he was one of Warren Buffett’s top stock pickers.

- Paul Ryan, Partner at Solamere Capital, former Speaker of the U.S. House of Representatives

Ryan is a partner at private-equity firm Solamere Capital and served as Speaker of the U.S. House of Representatives from 2015 to 2019. He previously chaired both the House Budget Committee and the Ways and Means Committee, making him a central Republican figure on fiscal and economic policy and tax legislation.

National security experts

- Condoleezza Rice, former U.S. Secretary of State

Rice served as U.S. Secretary of State under George W. Bush from 2005 to 2009 and, before that, as National Security Adviser. She played a central role in U.S. foreign policy and national‑security decision-making in the 2000s.

- Robert Gates, former U.S. Secretary of Defense

Gates served as CIA director under President George H.W. Bush from 1991 to 1993 and is a former U.S. Secretary of Defense, with a long career in national security and intelligence under both Republican and Democratic presidents.

- Chris Cavoli, retired general

Cavoli is a retired U.S. Army general who most recently served as Supreme Allied Commander Europe and Commander of U.S. European Command, overseeing NATO forces and U.S. military operations in Europe.

- Ann Dunwoody, retired Commanding General of U.S. Army Materiel Command

Dunwoody is a retired four‑star general and former Commanding General of U.S. Army Materiel Command. She’s the first woman in U.S. history to achieve the rank of four‑star general.

- Paul Nakasone, retired general and former NSA Director

Nakasone is a retired four‑star Army general who led the U.S. Cyber Command and served as director of the National Security Agency and chief of the Central Security Service from 2018 to 2024.

Business

‘Customers don’t care about AI’ — they want to boost cash flow and make ends meet, Intuit CEO says

Published

54 minutes ago on December 9, 2025

By

Jace Porter

While Wall Street and Silicon Valley are obsessed with artificial intelligence, many businesses don’t have the luxury to fixate on AI because they’re too busy trying to grind out more revenue.

At the Fortune Brainstorm AI conference in San Francisco on Monday, Intuit CEO Sasan Goodarzi acknowledged the day-to-day priorities of users of his company’s products, such as QuickBooks, TurboTax, Mailchimp and Credit Karma.

“I remind ourselves at the company all the time: customers don’t care about AI,” he told Fortune’s Andrew Nusca. “Everybody talks about AI, but the reality is a consumer is looking to increase their cash flow. A consumer is looking to power their prosperity to make ends meet. A business is trying to get more customers. They’re trying to manage their customers, sell them more services.”

Of course, AI still powers Intuit’s platforms, which help companies and entrepreneurs digest data that’s often stovepiped across dozens of separate applications they juggle. So Intuit declared years ago that it would focus on delivering “done-for-you experiences,” Goodarzi said.

On the enterprise side, it means helping businesses manage sales leads, cash flow, accounting, or taxes. On the consumer side, it entails helping users build credit and wealth. Expertise from a real person, or human intelligence (HI), is an essential component as well.

“Customers don’t care about AI,” Goodarzi added. “What they care about is ‘Help me grow my business, help me prosper.’ And we have found the only way to do that is to combine technology automating everything for them with human intelligence on our platform that can actually give you the human touch and the advice. And we believe that will be the case for decades to come. But the role of the HI, the human, will change.”

For example, an Intuit AI agent can hand off tasks to humans by helping them follow up with business clients who have overdue invoices or identify which ones typically pay on time.

Ashok Srivastava, Intuit’s chief AI officer, noted that the AI agents on average save customers 12 hours per month on routine tasks. In addition, users get paid five days sooner and are 10% more likely to be paid in full.

“As a person who’s run small businesses in the past, I can tell you numbers like that are very meaningful,” he said. “Twelve more hours means 12 more hours that I can spend building my products, understanding my customers.”


Business

The problem with ‘human in the loop’ AI? Often, it’s the humans

Published

1 hour ago on December 9, 2025

By

Jace Porter

Welcome to Eye on AI. In this edition…AI is outperforming some professionals…Google plans to bring ads to Gemini…leading AI labs team up on AI agent standards…a new effort to give AI models a longer memory…and the mood turns on LLMs and AGI.

Greetings from San Francisco, where we are just wrapping up Fortune Brainstorm AI. On Thursday, we’ll bring you a roundup of insights from the conference. But today, I want to talk about some notable studies from the past few weeks with potentially big implications for the business impact AI may have.

First, there was a study from the AI evaluations company Vals AI that pitted several legal AI applications as well as ChatGPT against human lawyers on legal research tasks. All of the AI applications beat the average human lawyers (who were allowed to use digital legal search tools) in drafting legal research reports across three criteria: accuracy, authoritativeness, and appropriateness. The lawyers’ aggregate median score was 69%, while ChatGPT scored 74%, Midpage 76%, Alexi 77%, and Counsel Stack, which had the highest overall score, 78%.

One of the more intriguing findings is that for many question types, it was the generalist ChatGPT that was the most accurate, beating out the more specialized applications. And while ChatGPT lost points for authoritativeness and appropriateness, it still topped the human lawyers across those dimensions.

The study has been faulted for not testing some of the better-known and most widely adopted legal AI research tools, such as Harvey, Legora, CoCounsel from Thomson Reuters, or LexisNexis Protégé, and for only testing ChatGPT among the frontier general-purpose models. Still, the findings are notable and comport with what I’ve heard anecdotally from lawyers.

A little while ago I had a conversation with Chris Kercher, a litigator at Quinn Emanuel who founded that firm’s data and analytics group. Quinn Emanuel has been using Anthropic’s general purpose AI model Claude for a lot of tasks. (This was before Anthropic’s latest model, Claude Opus 4.5, debuted.) “Claude Opus 3 writes better than most of my associates,” Kercher told me. “It just does. It is clear and organized. It’s a great model.” He said he is “constantly amazed” by what LLMs can do, finding new issues, strategies, and tactics that he can use to argue cases.

Kercher said that AI models have allowed Quinn Emanuel to “invert” its prior work processes. In the past, junior lawyers, known as associates, used to spend days researching and writing up legal memos, finding citations for every sentence, before presenting those memos to more senior lawyers who would incorporate some of that material into briefs or arguments that would actually be presented in court. Today, he says, AI generates drafts that are by and large better, in a fraction of the time, and these drafts are then given to associates to vet. The associates are still responsible for the accuracy of the memos and citations, just as they always were, but now they are fact-checking the AI and editing what it produces, not performing the initial research and drafting, he said.

He said that the most experienced, senior lawyers often get the most value out of working with AI, because they have the expertise to know how to craft the perfect prompt, along with the professional judgment and discernment to quickly assess the quality of the AI’s response. Is the argument the model has come up with sound? Is it likely to work in front of a particular judge or be convincing to a jury? These sorts of questions still require judgment that comes from experience, Kercher said.

Ok, so that’s law, but it likely points to ways in which AI is beginning to upend work within other “knowledge industries” too. Here at Brainstorm AI yesterday, I interviewed Michael Truell, the cofounder and CEO of hot AI coding tool Cursor. He noted that in a University of Chicago study looking at the effects of developers using Cursor, it was often the most experienced software engineers who saw the most benefit from using Cursor, perhaps for some of the same reasons Kercher says experienced lawyers get the most out of Claude—they have the professional experience to craft the best prompts and the judgment to better assess the tools’ outputs.

Then there was a study out on the use of generative AI to create visuals for advertisements. Business professors at New York University and Emory University tested whether advertisements for beauty products created by human experts alone, created by human experts and then edited by AI models, or created entirely by AI models were most appealing to prospective consumers. They found the ads that were entirely AI generated were chosen as the most effective—increasing clickthrough rates in a trial they conducted online by 19%. Meanwhile, those created by humans and edited by AI were actually less effective than those simply created by human experts with no AI intervention. But, critically, if people were told the ads were AI-generated, their likelihood of buying the product declined by almost a third.

Those findings present a big ethical challenge to brands. Most AI ethicists think people should generally be told when they are consuming content generated by AI. And advertisers do need to negotiate various Federal Trade Commission rulings around “truth in advertising.” But many ads already use actors posing in various roles without needing to necessarily tell people that they are actors—or the ads do so only in very fine print. How different is AI-generated advertising? The study seems to point to a world where more and more advertising will be AI-generated and where disclosures will be minimal.

The study also seems to challenge the conventional wisdom that “centaur” solutions (which combine the strengths of humans and those of AI in complementary ways) will always perform better than either humans or AI alone. (Sometimes this is condensed to the aphorism “AI won’t take your job. A human using AI will take your job.”) A growing body of research seems to suggest that in many areas, this simply isn’t true. Often, the AI on its own actually produces the best results.

But it is also the case that whether centaur solutions work well depends tremendously on the exact design of the human-AI interaction. A study on human doctors using ChatGPT to aid diagnosis, for example, found that humans working with AI could indeed produce better diagnoses than either doctors or ChatGPT alone—but only if ChatGPT was used to render an initial diagnosis and human doctors, with access to the ChatGPT diagnosis, then gave a second opinion. If that process was reversed, and ChatGPT was asked to render the second opinion on the doctor’s diagnosis, the results were worse—and in fact, the second-best results were just having ChatGPT provide the diagnosis. In the advertising study, it would have been good if the researchers had looked at what happens if AI generates the ads and then human experts edit them.

But in any case, momentum towards automation—often without a human in the loop—is building across many fields.

On that happy note, here’s more AI news.

Jeremy Kahn

jeremy.kahn@fortune.com

@jeremyakahn

FORTUNE ON AI

Exclusive: Glean hits $200 million ARR, up from $100 million 9 months back—by Allie Garfinkle

Cursor developed an internal AI help desk that handles 80% of its employees’ support tickets, says the $29 billion startup’s CEO —by Beatrice Nolan

HP’s chief commercial officer predicts the future will include AI-powered PCs that don’t share data in the cloud —by Nicholas Gordon

OpenAI COO Brad Lightcap says code red will ‘force’ the company to focus, as the ChatGPT maker ramps up enterprise push —by Beatrice Nolan

AI IN THE NEWS

Trump allows Nvidia to sell H200 GPUs to China, but China may limit adoption. President Trump signaled he would allow exports of Nvidia’s high-end H200 chips to approved Chinese customers. Nvidia CEO Jensen Huang has called China a $50 billion annual sales opportunity for the company, but Beijing wants to limit the reliance of its companies on U.S.-made chips, and Chinese regulators are weighing an approval system that would require buyers to justify why domestic chips cannot meet their needs. They may even bar the public sector from purchasing H200s. But Chinese companies often prefer to use Nvidia chips and even train their models outside of China to get around U.S. export controls. Trump’s decision has triggered political backlash in Washington, with a bipartisan group of senators seeking to block such exports, though the legislation’s prospects remain uncertain. Read more from the Financial Times here.

Trump plans executive order on national AI standard, aimed at pre-empting state-level regulation. President Trump said he will issue an executive order this week creating a single national artificial-intelligence standard, arguing that companies cannot navigate a patchwork of 50 different state approval regimes, Politico reported. The move follows a leaked November draft order that sought to block state AI laws and reignited debate over whether federal rules should override state and local regulations. A previous attempt to add AI-preemption language to the year-end defense bill collapsed last week, prompting the administration to return to pursuing the policy through executive action instead.

Google plans to bring advertising to its Gemini chatbot in 2026. That’s according to a report in Adweek that cited information from two unnamed Google advertising clients. The story said that details on format, pricing, and testing remained unclear. It also said the new ad format for Gemini is separate from ads that will appear alongside “AI Mode” searches in Google Search.

Former Databricks AI head’s new AI startup valued at $4.5 billion in seed round. Unconventional AI, a startup cofounded by former Databricks AI head Naveen Rao, raised $475 million in a seed round led by Andreessen Horowitz and Lightspeed Venture Partners at a valuation of $4.5 billion—just two months after its founding, Bloomberg News reported. The company aims to build a novel, more energy-efficient computing architecture to power AI workloads.

Anthropic forms partnership with Accenture to target enterprise customers. Anthropic and Accenture have formed a three-year partnership that makes Accenture one of Anthropic’s largest enterprise customers and aims to help businesses, many of which remain skeptical, realize tangible returns from AI investments, the Wall Street Journal reported. Accenture will train 30,000 employees on Claude and, together with Anthropic, launch a dedicated business group targeting highly regulated industries and embedding engineers directly with clients to accelerate adoption and measure value.

OpenAI, Anthropic, Google, and Microsoft team up for new standard for agentic AI. The Linux Foundation is organizing a group called the Agentic Artificial Intelligence Foundation with participation from major AI companies, including OpenAI, Anthropic, Google, and Microsoft. It aims to create shared open-source standards that allow AI agents to reliably interact with enterprise software. The group will focus on standardizing key tools such as the Model Context Protocol, OpenAI’s Agents.md format, and Block’s Goose agent, aiming to ensure consistent connectivity, security practices, and contribution rules across the ecosystem. CIOs increasingly say common protocols are essential for fixing vulnerabilities and enabling agents to function smoothly in real business environments. Read more here from The Information.

EYE ON AI RESEARCH

Google has created a new architecture to give AI models longer-term memory. The architecture, called Titans—which Google first debuted at the start of 2025 and which Eye on AI covered at the time—is paired with a framework named MIRAS that is designed to give AI something closer to long-term memory. Instead of forgetting older details when its short memory window fills up, the system uses a separate memory module that continually updates itself. The system assesses how surprising any new piece of information is compared to what it has stored in its long-term memory, updating the memory module only when it encounters high surprise. In testing, Titans with MIRAS performed better than older models on tasks that require reasoning over long stretches of information, suggesting it could eventually help with things like analyzing complex documents, doing in-depth research, or learning continuously over time. You can read Google’s research blog here.

AI CALENDAR

Jan. 6: Fortune Brainstorm Tech CES Dinner. Apply to attend here.

Jan. 19-23: World Economic Forum, Davos, Switzerland.

Feb. 10-11: AI Action Summit, New Delhi, India.

BRAIN FOOD

At NeurIPS, the mood shifts against LLMs as a path to AGI. The Information reported that a growing number of researchers attending NeurIPS, the AI research field’s most important conference—which took place last week in San Diego (with satellite events in other cities)—are increasingly skeptical of the idea that large language models (LLMs) will ever lead to artificial general intelligence (AGI). Instead, they feel the field may need an entirely new kind of AI architecture to advance to more human-like AI that can continually learn, can learn efficiently from fewer examples, and can extrapolate and analogize concepts to previously unseen problems.

Figures such as Amazon’s David Luan and OpenAI co-founder Ilya Sutskever contend that current approaches, including large-scale pre-training and reinforcement learning, fail to produce models that truly generalize, while new research presented at the conference explores self-adapting models that can acquire new knowledge on the fly. Their skepticism contrasts with the view of leaders like Anthropic CEO Dario Amodei and OpenAI’s Sam Altman, who believe scaling current methods can still achieve AGI. If critics are correct, it could undermine billions of dollars in planned investment in existing training pipelines.
